In the earlier articles, I described MPEG and its commonly used versions. MPEG is for the compression of audio-video data, whereas the standard used for compressing photographic images is JPEG. This article gives you a brief idea about JPEG.

The JPEG standard was approved in 1994 as ISO/IEC 10918-1.

Friday 6 March 2009

JPEG: Joint Photographic Experts Group

0 comments Posted by Sukunath B A at 3/06/2009 09:03:00 am

Labels: Technical-Electronics

MPEG 7: Multimedia Content Description Interface

MPEG 7 is formally known as the Multimedia Content Description Interface. It is not aimed at a single application; the elements that MPEG 7 standardizes support a broad range of applications. It is a standard for describing multimedia content data in a way that supports some degree of interpretation of the information's meaning, which can be passed on to, or accessed by, a device or computer code.

The MPEG-7 Standard consists of the following parts:

1. Systems – the tools needed to prepare MPEG-7 descriptions for efficient transport and storage and the terminal architecture.

2. Description Definition Language - the language for defining the syntax of the MPEG-7 Description Tools and for defining new Description Schemes.

3. Visual – the Description Tools dealing with (only) Visual descriptions.

4. Audio – the Description Tools dealing with (only) Audio descriptions.

5. Multimedia Description Schemes - the Description Tools dealing with generic features and multimedia descriptions.

6. Reference Software - a software implementation of relevant parts of the MPEG-7 Standard with normative status.

7. Conformance Testing - guidelines and procedures for testing conformance of MPEG-7 implementations

8. Extraction and use of descriptions – informative material about the extraction and use of some of the Description Tools.

9. Profiles and levels - provides guidelines and standard profiles.

10. Schema Definition - specifies the schema using the Description Definition Language

querrymail@gmail.com

0 comments Posted by Sukunath B A at 3/06/2009 06:00:00 am

Labels: DSP Articles, Technical-Electronics

Thursday 5 March 2009

A Peep into MPEG 4

MPEG 4 is an audio-video compression standard similar to MPEG 1 and MPEG 2. It adopts all the features of MPEG 1 and 2 and adds new ones, such as (extended) VRML support for 3D rendering, object-oriented composite files (including audio, video and VRML objects), support for externally specified Digital Rights Management, and various types of interactivity. Its formal designation is ISO/IEC 14496.

MPEG 4 has been proven to be successful in three fields:

- Digital television

- Interactive graphics applications

- Interactive multimedia

Part 1: Systems - Describes synchronisation and multiplexing of audio and video.

Part 2: Visual - Visual data compression.

Part 3: Audio - For perceptual coding of audio signals.

Part 4: Conformance - Describes procedures for testing other parts.

Part 5: Reference Software - For demonstrating and clarifying other parts of the standard.

Part 6: Delivery Multimedia Integration Framework

Part 7: Optimized Reference Software

Part 8: Carriage on IP networks

Part 9: Reference Hardware

Part 10: Advanced Video Coding (AVC)

Part 11: Scene description and Application engine ("BIFS")

Part 12: ISO Base Media File Format- File format for storing media content

Part 13: Intellectual Property Management and Protection (IPMP) Extensions.

Part 14: MPEG-4 File Format

Part 15: AVC File Format

Part 16: Animation Framework eXtension (AFX).

Part 17: Timed Text subtitle format.

Part 18: Font Compression and Streaming.

Part 19: Synthesized Texture Stream.

Part 20: Lightweight Application Scene Representation (LASeR).

Part 21: MPEG-J Graphical Framework eXtension (GFX)

Part 22: Open Font Format Specification (OFFS) based on OpenType

Part 23: Symbolic Music Representation (SMR)

0 comments Posted by Sukunath B A at 3/05/2009 06:00:00 am

Labels: DSP Articles, Technical-Electronics

Wednesday 4 March 2009

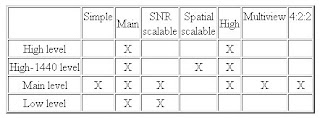

About MPEG 2

MPEG 2 is another video standard developed by the MPEG group, but it is not a successor to MPEG 1. Both MPEG 1 and MPEG 2 have their own functions: MPEG 1 is for low-bandwidth purposes and MPEG 2 is for high-bandwidth/broadband purposes. The international standard number of MPEG 2 is ISO/IEC 13818.

MPEG 2 is commonly used in digital TVs, DVD-Videos, SVCDs etc. Some Blu-ray discs also use it.

The maximum bit rate available for MPEG-2 streams is 10.08 Mbit/s and the minimum is 300 kbit/s.

Resolutions that video streams can use are:

720x480 (NTSC, only with MPEG-2)

720x576 (PAL, only with MPEG-2)

704x480 (NTSC, only with MPEG-2)

704x576 (PAL, only with MPEG-2)

352x480 (NTSC, MPEG-2 & MPEG-1)

352x576 (PAL, MPEG-2 & MPEG-1)

352x240 (NTSC, MPEG-2 & MPEG-1)

352x288 (PAL, MPEG-2 & MPEG-1)

The technical title for MPEG 2 is: "Generic coding of moving pictures and associated audio information".

MPEG-2 is a standard currently in 9 parts.

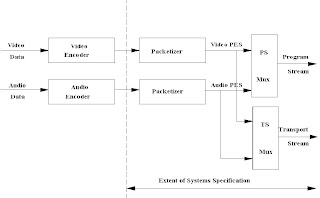

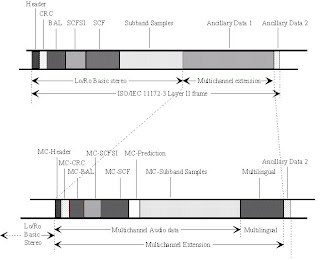

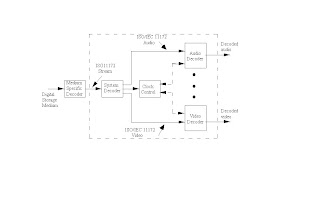

Part 1 of MPEG-2 addresses the combining of one or more elementary streams of video and audio, as well as other data, into single or multiple streams which are suitable for storage or transmission. This is specified in two forms: the Program Stream and the Transport Stream. Each is optimized for a different set of applications. A model is given in Figure 1 below.

The Transport Stream combines one or more Packetized Elementary Streams (PES) with one or more independent time bases into a single stream. Elementary streams sharing a common timebase form a program. The Transport Stream is designed for use in environments where errors are likely, such as storage or transmission in lossy or noisy media. Transport stream packets are 188 bytes long.
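To make the fixed packet size concrete, here is a minimal sketch of reading the header of one Transport Stream packet. The text above only states the 188-byte length; the sync byte value (0x47), the 13-bit packet identifier (PID) and the 4-bit continuity counter are taken from the MPEG-2 Systems packet layout, and the function name and sample packet are hypothetical.

```python
TS_PACKET_SIZE = 188  # bytes, as stated in the text
SYNC_BYTE = 0x47      # first byte of every Transport Stream packet


def parse_ts_header(packet: bytes) -> dict:
    """Decode a few fields of the fixed 4-byte Transport Stream packet header."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a Transport Stream packet must be 188 bytes long")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing sync byte 0x47")
    return {
        # Set when a new PES packet (or section) starts in this payload
        "payload_unit_start": bool(packet[1] & 0x40),
        # 13-bit packet identifier: which elementary stream this packet carries
        "pid": ((packet[1] & 0x1F) << 8) | packet[2],
        # 4-bit counter that increments per PID, used to detect lost packets
        "continuity_counter": packet[3] & 0x0F,
    }


# Hypothetical packet: PID 0x0100, payload start flag set, counter 5, filler payload
sample = bytes([0x47, 0x41, 0x00, 0x15]) + bytes([0xFF]) * 184
header = parse_ts_header(sample)
print(header)
```

The short, fixed-size packet with a sync byte at the start is what lets a receiver resynchronise quickly after errors, which fits the noisy environments the Transport Stream is designed for.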

Parts 4 and 5 of MPEG-2 correspond to Parts 4 and 5 of MPEG-1.

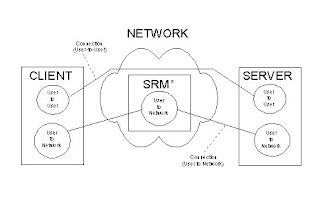

Part 6 of MPEG-2 - Digital Storage Media Command and Control (DSM-CC) is the specification of a set of protocols which provides the control functions and operations specific to managing MPEG-1 and MPEG-2 bitstreams. These protocols may be used to support applications in both stand-alone and heterogeneous network environments. In the DSM-CC model, a stream is sourced by a Server and delivered to a Client. Both the Server and the Client are considered to be Users of the DSM-CC network. DSM-CC defines a logical entity called the Session and Resource Manager (SRM) which provides a (logically) centralized management of the DSM-CC Sessions and Resources.

Part 7 of MPEG-2 will be the specification of a multichannel audio coding algorithm not constrained to be backwards-compatible with MPEG-1 Audio.

Part 8 of MPEG-2 was originally planned to be coding of video when input samples are 10 bits.

Part 9 of MPEG-2 is the specification of the Real-time Interface (RTI) to Transport Stream decoders which may be utilized for adaptation to all appropriate networks carrying Transport Streams.

Part 10 is the conformance testing part of DSM-CC.

0 comments Posted by Sukunath B A at 3/04/2009 07:19:00 am

Labels: DSP Articles, Technical-Electronics

Tuesday 3 March 2009

More About MPEG 1

The MPEG-1 audio and video compression format was developed by the MPEG group back in 1993. The official description for it is "Coding of moving pictures and associated audio for digital storage media at up to about 1,5 Mbit/s".

MPEG-1 is the video format that has had some extremely popular spin-offs and side products, most notably MP3 and VideoCD.

MPEG-1's compression method is based on re-using existing frame material and on exploiting the psychological and physical limitations of human senses. The MPEG-1 video compression method tries to use the previous frame's information in order to reduce the amount of information the current frame requires. Also, the audio encoding uses a technique called psychoacoustics: the compression removes sounds that a normal human ear cannot hear, such as the highest and lowest frequencies.

Resolutions that video streams can use are:

352x480 (NTSC, MPEG-2 & MPEG-1)

352x576 (PAL, MPEG-2 & MPEG-1)

352x240 (NTSC, MPEG-2 & MPEG-1)

352x288 (PAL, MPEG-2 & MPEG-1)

The MPEG-1 standard consists of five parts, named Part 1 to Part 5.

Part 1 addresses the problem of combining one or more data streams from the video and audio parts of the MPEG-1 standard with timing information to form a single stream as in figure. This is an important function because, once combined into a single stream, the data are in a form well suited to digital storage or transmission.

Part 2 specifies a coded representation that can be used for compressing video sequences, both 625-line and 525-line, to bitrates around 1.5 Mbit/s. Part 2 was developed to operate principally from storage media offering a continuous transfer rate of about 1.5 Mbit/s.

A number of techniques are used to achieve a high compression ratio. The first is to select an appropriate spatial resolution for the signal. The algorithm then uses block-based motion compensation to reduce the temporal redundancy. Motion compensation is used for causal prediction of the current picture from a previous picture, for non-causal prediction of the current picture from a future picture, or for interpolative prediction from past and future pictures. The difference signal, the prediction error, is further compressed using the discrete cosine transform (DCT) to remove spatial correlation and is then quantised. Finally, the motion vectors are combined with the DCT information, and coded using variable length codes.
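The transform-and-quantise step described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the 8x8 block size, the single uniform quantiser step, the pixel values and the function names are all hypothetical, and the slow textbook DCT-II is used instead of the optimised transforms and quantisation tables of a real encoder.

```python
import math

N = 8  # block size; assumed here, not stated in the text


def dct_2d(block):
    """Plain (slow) 2-D DCT-II of an N x N block with orthonormal scaling."""
    def scale(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = scale(u) * scale(v) * total
    return out


def quantise(coeffs, step):
    """Uniform quantisation: divide every coefficient by one step and round."""
    return [[round(value / step) for value in row] for row in coeffs]


# Hypothetical data: a block of the current picture and its motion-compensated prediction
current = [[100 + x + y for y in range(N)] for x in range(N)]
predicted = [[98 + x + y for y in range(N)] for x in range(N)]

# Prediction error: what is left after motion compensation (here a constant 2)
error = [[current[x][y] - predicted[x][y] for y in range(N)] for x in range(N)]

levels = quantise(dct_2d(error), step=4)
nonzero = sum(1 for row in levels for value in row if value != 0)
print(levels[0][0], nonzero)
```

Because the prediction error in this toy block is flat, the DCT packs it into a single low-frequency coefficient, leaving the variable length coder with very little to encode.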

The figure below illustrates a possible combination of the three main types of pictures that are used in the standard.

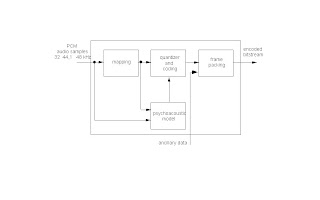

Part 3 specifies a coded representation that can be used for compressing audio sequences - both mono and stereo. The algorithm is illustrated in Figure 3 below. Input audio samples are fed into the encoder. The mapping creates a filtered and subsampled representation of the input audio stream. A psychoacoustic model creates a set of data to control the quantiser and coding. The quantiser and coding block creates a set of coding symbols from the mapped input samples. The block 'frame packing' assembles the actual bitstream from the output data of the other blocks, and adds other information (e.g. error correction) if necessary.

Part 4 specifies how tests can be designed to verify whether bit streams and decoders meet the requirements as specified in Parts 1, 2 and 3 of the MPEG-1 standard. These tests can be used by:

- manufacturers of encoders, and their customers, to verify whether the encoder produces valid bit streams.

- manufacturers of decoders, and their customers, to verify whether the decoder meets the requirements specified in Parts 1, 2 and 3 of the standard for the claimed decoder capabilities.

- applications, to verify whether the characteristics of a given bit stream meet the application requirements, for example whether the size of the coded picture does not exceed the maximum value allowed for the application.

Part 5, technically not a standard, but a technical report, gives a full software implementation of the first three parts of the MPEG-1 standard.

0 comments Posted by Sukunath B A at 3/03/2009 07:23:00 am

Labels: DSP Articles, Technical-Electronics

MPEG Compression

MPEG uses an asymmetric compression method. Compression under MPEG is far more complicated than decompression, making MPEG a good choice for applications that need to write data only once, but need to read it many times. An example of such an application is an archiving system.

MPEG uses two types of compression methods to encode video data: interframe and intraframe encoding.

Interframe encoding is based upon both predictive coding and interpolative coding techniques, as described below.

When capturing frames at a rapid rate (typically 30 frames/second for real-time video) there will be a lot of identical data contained in any two or more adjacent frames. If a motion compression method is aware of this temporal redundancy, then it need not encode the entire frame of data, as is done via intraframe encoding. Instead, only the differences (deltas) in information between the frames are encoded. This results in greater compression ratios, with far less data needing to be encoded. This type of interframe encoding is called predictive encoding.

A further reduction in data size may be achieved by the use of bi-directional prediction. Differential predictive encoding encodes only the differences between the current frame and the previous frame. Bi-directional prediction encodes the current frame based on the differences between the current, previous, and next frame of the video data. This type of interframe encoding is called motion-compensated interpolative encoding.
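The difference between the two kinds of interframe encoding can be seen in a toy example. This is a sketch only: the "frames" are hypothetical four-sample rows, the bi-directional prediction is a plain average of the previous and next frames, and no motion vectors are involved, which a real encoder would add.

```python
def residual(actual, prediction):
    """Per-sample difference (delta) left to encode after prediction."""
    return [a - p for a, p in zip(actual, prediction)]


def energy(values):
    """Sum of squares: a rough measure of how much is left to encode."""
    return sum(v * v for v in values)


# Hypothetical frames: the brightness rises steadily from frame to frame
previous_frame = [10, 20, 30, 40]
current_frame = [12, 22, 32, 42]
next_frame = [14, 24, 34, 44]

# Predictive encoding: the current frame is predicted from the previous one only
forward_residual = residual(current_frame, previous_frame)

# Interpolative encoding: the prediction is the average of previous and next frames
interpolated = [(p + n) / 2 for p, n in zip(previous_frame, next_frame)]
bidirectional_residual = residual(current_frame, interpolated)

print(forward_residual, energy(forward_residual))
print(bidirectional_residual, energy(bidirectional_residual))
```

In this contrived case the interpolated prediction matches the current frame exactly, so nothing remains to encode; real video rarely behaves so neatly, but the residual is often smaller than with forward prediction alone.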

To support both interframe and intraframe encoding, an MPEG data stream contains three types of coded frames:

* I-frames (intraframe encoded)

* P-frames (predictive encoded)

* B-frames (bi-directional encoded)

An I-frame contains a single frame of video data that does not rely on the information in any other frame to be encoded or decoded. Each MPEG data stream starts with an I-frame.

A P-frame is constructed by predicting the difference between the current frame and the closest preceding I- or P-frame. A B-frame is constructed from the two closest I- or P-frames. The B-frame must be positioned between these I- or P-frames.

A typical sequence of frames in an MPEG stream might look like this:

IBBPBBPBBPBBIBBPBBPBBPBBI

In theory, the number of B-frames that may occur between any two I- and P-frames is unlimited. In practice, however, an I-frame typically occurs once in every twelve frames, with P- and B-frames filling the gap, as in the sequence above. One I-frame will occur approximately every 0.4 seconds of video run time.
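The 0.4-second figure can be checked against the sample sequence with a few lines of arithmetic. A small sketch, assuming the 30 frames/second rate mentioned earlier; the function name is hypothetical.

```python
def i_frame_interval(sequence: str, frames_per_second: float) -> float:
    """Seconds between the first two I-frames in a string of frame types."""
    first = sequence.index("I")
    second = sequence.index("I", first + 1)
    return (second - first) / frames_per_second


sequence = "IBBPBBPBBPBBIBBPBBPBBPBBI"  # the sample sequence from the text
interval = i_frame_interval(sequence, 30)
print(interval)
```

In the sample sequence the I-frames sit twelve frame positions apart, and twelve frames at 30 frames/second take 0.4 seconds.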

Remember that the MPEG data is not decoded and displayed in the order that the frames appear within the stream. Because B-frames rely on two reference frames for prediction, both reference frames need to be decoded first from the bit stream, even though the display order may have a B-frame in between the two reference frames.

In the previous example, the I-frame is decoded first. But, before the two B-frames can be decoded, the P-frame must be decoded, and stored in memory with the I-frame. Only then may the two B-frames be decoded from the information found in the decoded I- and P-frames. Assume, in this example, that you are at the start of the MPEG data stream. The first ten frames are stored in the sequence IBBPBBPBBP (0123456789), but are decoded in the sequence:

IPBBPBBPBB (0312645978)

and finally are displayed in the sequence:

IBBPBBPBBP (0123456789)
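The reordering above follows one simple rule: every B-frame has to wait until the reference frame that follows it has been decoded. A minimal sketch of that rule, with a hypothetical function name, taking the frame types in display order and returning the frame numbers in decode order:

```python
def decode_order(display_types: str) -> list[int]:
    """Return display-order frame numbers in the order they must be decoded."""
    order = []
    pending_b = []  # B-frames waiting for the reference frame that follows them
    for index, frame_type in enumerate(display_types):
        if frame_type == "B":
            pending_b.append(index)
        else:
            # An I- or P-frame is a reference: decode it first,
            # then the B-frames that were waiting for it
            order.append(index)
            order.extend(pending_b)
            pending_b.clear()
    # B-frames at the end of the excerpt whose next reference is not included
    order.extend(pending_b)
    return order


types = "IBBPBBPBBP"
order = decode_order(types)
print("".join(types[i] for i in order), order)
```

For the ten frames in the example this reproduces the decode sequence IPBBPBBPBB (0312645978) given above.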

Once an I-, P-, or B-frame is constructed, it is compressed using a DCT compression method similar to JPEG. Where interframe encoding reduces temporal redundancy (data identical over time), the DCT-encoding reduces spatial redundancy (data correlated within a given space). Both the temporal and the spatial encoding information are stored within the MPEG data stream.

By combining spatial and temporal sub-sampling, the overall bandwidth reduction achieved by MPEG can be considered to be upwards of 200:1. However, with respect to the final input source format, the useful compression ratio tends to be between 16:1 and 40:1. The ratio depends upon what the encoding application deems as "acceptable" image quality (higher quality video results in poorer compression ratios). Beyond these figures, the MPEG method becomes inappropriate for an application.

In practice, the sizes of the frames tend to be 150 Kbits for I-frames, around 50 Kbits for P-frames, and 20 Kbits for B-frames. The video data rate is typically constrained to 1.15 Mbits/second, the standard for DATs and CD-ROMs.
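These frame sizes and the 1.15 Mbits/second rate fit together, as a quick calculation shows. The sketch below assumes the twelve-frame pattern from the sample sequence and 30 frames/second; the names are hypothetical.

```python
# Typical frame sizes quoted above, in Kbits
FRAME_KBITS = {"I": 150, "P": 50, "B": 20}


def average_kbits_per_second(pattern: str, frames_per_second: float) -> float:
    """Average video data rate for a repeating pattern of frame types."""
    total_kbits = sum(FRAME_KBITS[frame_type] for frame_type in pattern)
    return total_kbits * frames_per_second / len(pattern)


pattern = "IBBPBBPBBPBB"  # one I-frame period of the sample sequence
rate = average_kbits_per_second(pattern, 30)
print(rate)
```

One I-frame, three P-frames and eight B-frames add up to 460 Kbits per twelve frames, which at 30 frames/second comes to 1150 Kbits/second, that is, 1.15 Mbits/second.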

The MPEG standard does not mandate the use of P- and B-frames. Many MPEG encoders avoid the extra overhead of B- and P-frames by encoding only I-frames. Each video frame is captured, compressed, and stored in its entirety, in a similar way to Motion JPEG. I-frames are very similar to JPEG-encoded frames. In fact, the JPEG Committee has plans to add MPEG I-frame methods to an enhanced version of JPEG, possibly to be known as JPEG-II.

With no delta comparisons to be made, encoding may be performed quickly; with a little hardware assistance, encoding can occur in real time (30 frames/second). Also, random access of the encoded data stream is very fast because I-frames are not as complex and time-consuming to decode as P- and B-frames. Any reference frame needs to be decoded before it can be used as a reference by another frame.

There are also some disadvantages to this scheme. The compression ratio of an I-frame-only MPEG file will be lower than the same MPEG file using motion compensation. A one-minute file consisting of 1800 frames would be approximately 2.5Mb in size. The same file encoded using B- and P-frames would be considerably smaller, depending upon the content of the video data. Also, this scheme of MPEG encoding might decompress more slowly on applications that allocate an insufficient amount of buffer space to handle a constant stream of I-frame data.

0 comments Posted by Sukunath B A at 3/03/2009 07:08:00 am

Labels: DSP Articles, Technical-Electronics

Monday 2 March 2009

MPEG: Moving Picture Experts Group

MPEG stands for Moving Picture Experts Group. It is a family of standards used for coding audio-visual information (e.g., movies, video, music) in a digital compressed format.

The major advantage of MPEG compared to other video and audio coding formats is that MPEG files are much smaller for the same quality. This is because MPEG uses very sophisticated compression techniques.

The Moving Picture Experts Group (MPEG) is a working group of ISO/IEC in charge of the development of standards for coded representation of digital audio and video. Established in 1988, the group has produced the following compression mechanisms:

MPEG-1: The standard on which such products as Video CD and MP3 are based.

MPEG-2: The standard on which such products as Digital Television set top boxes and DVD are based.

MPEG-4: The standard for multimedia for the fixed and mobile web.

MPEG-7: The standard for description and search of audio and visual content.

MPEG-21: The Multimedia Framework.

In addition to these consolidated standards, MPEG has started a number of new standard lines:

MPEG-A: Multimedia application format.

MPEG-B: MPEG systems technologies.

MPEG-C: MPEG video technologies.

MPEG-D: MPEG audio technologies.

MPEG-E: Multimedia Middleware.

MPEG is a working group of ISO, the International Organization for Standardization. Its formal name is ISO/IEC JTC 1/SC 29/WG 11. The title is: Coding of moving pictures and audio.

MPEG held its first meeting in May 1988 in Ottawa, Canada. By late 2005, MPEG had grown to include approximately 350 members per meeting from various industries, universities, and research institutions. MPEG has now become an integral part of modern life.

0 comments Posted by Sukunath B A at 3/02/2009 06:46:00 pm

Labels: DSP Articles, Technical-Electronics